Making a twitter video frame bot, the silly way

Update 2023

Due to changes with the Twitter API, this project is not currently running and the portions dealing with the Twitter API may no longer be applicable.

Thank you for your understanding.

This page details my steps in setting up and operating the twitter monogatari robot.

A previous attempt at running a Monogatari Series twitter bot died under mysterious circumstances, and to the best of my knowledge, nobody else started a slot-in replacement. Spotting a gap in the twitter clout market, I sprang into action and assembled this minor travesty.

These steps are broadly replicable, if you really really wanna do something similar.

Step 1 : Preparing the frames

Pretend, for the sake of argument, that you have your target show and movies on DVD/Blu-ray (I do, actually). If you have a computer with a disc drive, you can use MakeMKV to rip the video files right off the disc. For liability reasons, that's the route I'm recommending. Name these files in a way that a computer can easily sort into the right order. I've named the season folders with a leading number, and the episodes within with a trailing number: "04.Nekomonogatari Kuro\Nekomonogatari Kuro 01.mkv", for example. Doing this now saves having to write any sort logic and makes tweaking the results later easy.

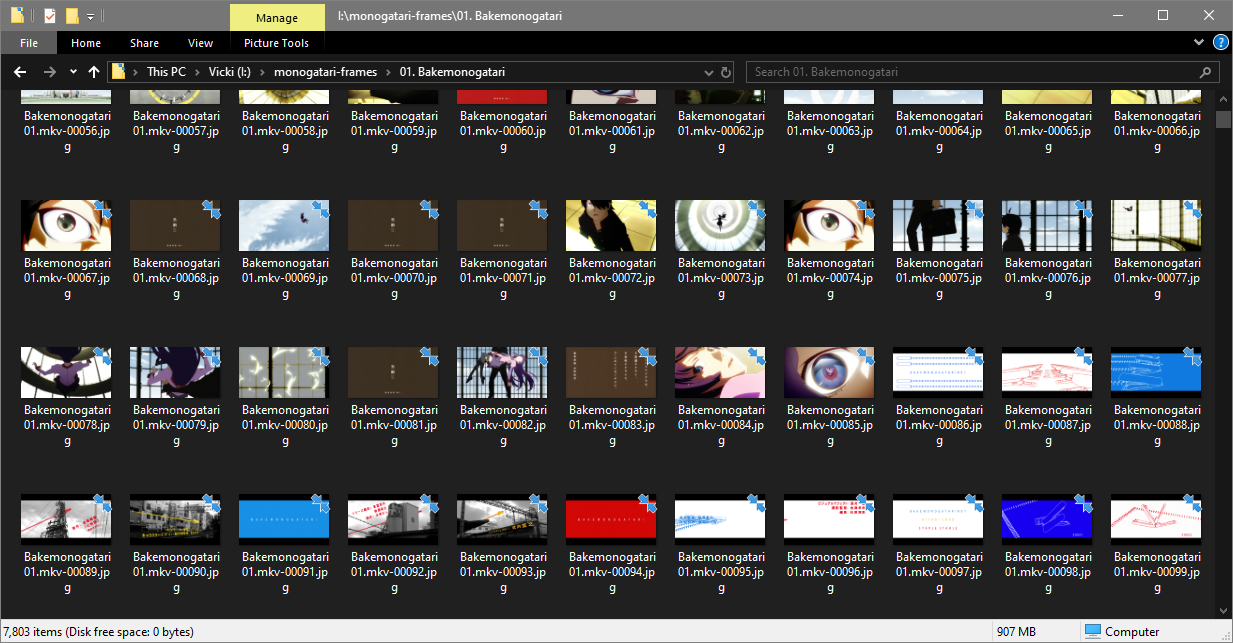
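To show why the numbering matters, here's a quick Python sketch. The file names are made up for illustration and only follow the pattern described above. A plain alphabetical sort lands in the right order only when the numbers are zero-padded.

```python
# Hypothetical names for illustration; they only follow the
# "leading season number, trailing episode number" pattern.
padded = [
    "04.Nekomonogatari Kuro/Nekomonogatari Kuro 10.mkv",
    "04.Nekomonogatari Kuro/Nekomonogatari Kuro 02.mkv",
    "01.Bakemonogatari/Bakemonogatari 01.mkv",
]
unpadded = ["Episode 10.mkv", "Episode 2.mkv", "Episode 1.mkv"]

# Zero-padded numbers come out in viewing order with a plain string sort.
padded_sorted = sorted(padded)
print(padded_sorted)

# Without padding, "10" sorts before "2" because strings compare
# character by character and "1" < "2".
unpadded_sorted = sorted(unpadded)
print(unpadded_sorted)
```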

I started by dumping every single goddamn frame into a folder. That was a bad idea and froze my computer up when opening said folder to inspect the results. Thinking about what exactly I wanted, I realised that literally every frame was the wrong way to go about things. It would be overkill and tremendously boring for long stretches of time, as well as a burden on disk space. I wanted the moments, the cuts, the good shit.

The obvious solution in my head was to extract the I-Frames out of the video file itself. When a video is encoded, it is compressed to save space. In nearly every video format, I-Frames are effectively keyframes that store the entire picture, while the P-Frames and B-Frames between them only store what changed relative to nearby frames. Encoders usually place an I-Frame at a scene cut, and otherwise at regular intervals, so I figured that by just grabbing the I-Frames, I'd have all the scene cuts and large motions without any of the weird goofy in-between frames. This mostly worked out, as you'll find shortly.

I quickly stack-overflowed together a thing in Batch, everyone's favourite scripting language, to scan a directory structure, slurp out the .mkv video files, dump every single I-Frame from every video, then carefully recreate that structure elsewhere on a disk and move the newly created .jpg files over to that.

Install ffmpeg somewhere handy and change the paths if you use this.

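    @echo off

    REM Walk each season folder directly under the source directory.
    FOR /D %%D IN (I:\projects\MonogatariSeries\*) DO (
        REM %%~nxD is just the folder's own name, e.g. 01.Bakemonogatari.
        mkdir "I:\monogatari-frames\%%~nxD" 2>nul
        pushd "%%D"
        REM Dump every I-frame of every .mkv as a numbered .jpg.
        FOR %%F IN (*.mkv) DO (
            ffmpeg -i "%%F" -f image2 -q:v 2 -vf "select='eq(pict_type,PICT_TYPE_I)'" -vsync vfr "%%F-%%05d.jpg"
        )
        REM Move the new frames into the mirrored output folder.
        move *.jpg "I:\monogatari-frames\%%~nxD"
        popd
    )

From a tree of input files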

    01.Bakemonogatari
        Bakemonogatari 01.mkv
        Bakemonogatari 02.mkv
        ...
    02.Kizumonogatari
        Kizumonogatari 01.mkv
        ...
    ...

this produces an output of;

    01.Bakemonogatari
        Bakemonogatari 01.mkv-00001.jpg
        Bakemonogatari 01.mkv-00002.jpg
        ...
        Bakemonogatari 02.mkv-00001.jpg
        Bakemonogatari 02.mkv-00002.jpg
        ...
    02.Kizumonogatari
        Kizumonogatari 01.mkv-00001.jpg
        Kizumonogatari 01.mkv-00002.jpg
        ...
    ...

et cetera. Pretty good!
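If Batch isn't your thing, the same walk can be sketched in Python. This is not what I ran. It's a rough equivalent that assumes the same one-level season/episode layout and reuses the same ffmpeg arguments. The function names `plan_extraction` and `extract_all` are made up for this example.

```python
import subprocess
from pathlib import Path

def plan_extraction(source_root, output_root):
    """Return (output_dir, ffmpeg_args) pairs, one per .mkv file found."""
    plan = []
    seasons = sorted(p for p in Path(source_root).iterdir() if p.is_dir())
    for season in seasons:
        # Mirror the season folder name under the output root.
        out_dir = Path(output_root) / season.name
        for video in sorted(season.glob("*.mkv")):
            # ffmpeg fills in %05d with a running frame counter.
            pattern = out_dir / f"{video.name}-%05d.jpg"
            args = [
                "ffmpeg", "-i", str(video),
                "-f", "image2", "-q:v", "2",
                "-vf", "select='eq(pict_type,PICT_TYPE_I)'",
                "-vsync", "vfr",
                str(pattern),
            ]
            plan.append((out_dir, args))
    return plan

def extract_all(source_root, output_root):
    """Create the output folders and run ffmpeg once per video."""
    for out_dir, args in plan_extraction(source_root, output_root):
        out_dir.mkdir(parents=True, exist_ok=True)
        subprocess.run(args, check=True)
```

Splitting the planning from the running means you can print the plan and eyeball the paths before committing a disk's worth of .jpg files to it.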

that disk has run out of space again


This worked well enough to get started, and I didn't scrutinise the output too closely. Long stretches of silence or minimally animated dialogue sequences compressed down to a frame or two, while bursts of rapid cuts unfolded into 10, 20 frame strings. Looked good to me.

Step 2 : Posting the frames

To post on twitter, you need an account. Luckily I had an old one sitting around originally intended for something else, so no need to sign up another. I gave it a new name, some paint and signed up to the twitter developer API. On that page you have to create an app, answer some basic questions justifying its existence, and generate new credentials for it. In doing so, I learned you can name it literally anything you want, so I dubbed it koyomibot, which is now nicely emblazoned beneath every tweet made by it.

Rather than rely on internet hosting, I wanted a local solution so I could have direct access to the files and fuck with it at a moment's notice. With that in mind, I completely ignored websites offering automatic bot creation and hammered my own out. The typical language for creating bots and scripts and other computer wizardry is renowned scripting snake Python. Despite having only minimal experience with, and a mild dislike of, Python, it turned out to be the perfect fit.

Posting to twitter was beyond simple. Python's true strength lies in the sheer number of easily importable libraries. I had a quick look around and my first pick, Tweepy, turned out to be an easy fit. You install it, import it, start it up with your API credentials, feed it post data and it just works.

The script I wrote is pretty straightforward, it functions thus;

1. Read the pre-sorted frame directory to an array
2. Load a state file containing the last filename visited
3. Transform that filename into an index in the frame array and set the pointer to that index
4. Log into twitter via the Tweepy library
5. Loop through the frame array.
6. Grab the filepath from the frame array
7. Submit that file to Twitter
8. Store the next file in the sequence to the state file.
9. Sleep for 20 minutes
10. Select the next index
11. GOTO 6.
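The steps above can be sketched roughly like this. It is not my actual script, just a minimal outline under a few assumptions: the function names are invented, and `post` is a hypothetical callable standing in for wherever the Tweepy upload call would go, so the loop itself never touches the Twitter API.

```python
import time
from pathlib import Path

def list_frames(frame_root):
    """Every .jpg under frame_root as a sorted list of path strings."""
    return sorted(str(p) for p in Path(frame_root).rglob("*.jpg"))

def load_index(state_file, frames):
    """Turn the filename stored in the state file into an index (0 if unknown)."""
    try:
        name = Path(state_file).read_text(encoding="utf-8").strip()
        return frames.index(name)
    except (FileNotFoundError, ValueError):
        return 0

def run_bot(frames, state_file, post, interval=20 * 60, sleep=time.sleep):
    """Post each remaining frame in order, saving progress after every post."""
    index = load_index(state_file, frames)
    while index < len(frames):
        post(frames[index])
        index += 1
        if index == len(frames):
            break  # ran off the end; no looping back to the start
        # Remember the next frame so a restart picks up where we left off.
        Path(state_file).write_text(frames[index], encoding="utf-8")
        sleep(interval)
```

Reading and writing the state file with an explicit encoding is one way to dodge the kind of encoding surprises a hand-edited text file can cause.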

The source can be found on my notes page.

The state is stored to a "state.txt" file that lives in the same directory as the script. Mess with it and things break, it's surprisingly encoding-sensitive.

As written, the index counting is a little contrived. The sleep timer method for delaying a post isn't perfect: it will slowly drift, as it doesn't take the upload/state store time into account. Initially the bot would just crash out if it encountered an error. After an embarrassing spell where it was down for an entire 8 hours, I added some exception handling code that catches errors and attempts to post again. It also has no functionality to loop back to the start. I anticipate just deleting the state file and rebooting it.
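For the curious, one common way around the drift is to schedule each post against a running deadline rather than sleeping a flat 20 minutes after each one. The sketch below is not what my script does. `run_on_schedule` and its parameters are invented for illustration, and each job is assumed to be a zero-argument callable that raises on failure.

```python
import time

def run_on_schedule(jobs, interval=20 * 60, retry_delay=60,
                    clock=time.monotonic, sleep=time.sleep):
    """Run each job one interval apart, retrying a failed job until it works."""
    next_due = clock()
    for job in jobs:
        while True:
            try:
                job()
                break
            except Exception as exc:  # broad on purpose: keep the bot alive
                print(f"post failed ({exc!r}), retrying in {retry_delay}s")
                sleep(retry_delay)
        # Count from the previous deadline, not from "now", so time spent
        # uploading or retrying doesn't push every later post back.
        next_due += interval
        sleep(max(0.0, next_due - clock()))
```

With a flat sleep, a 5 second upload makes every cycle 20 minutes and 5 seconds long. With a deadline, the sleep shrinks to absorb it.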

Don't use my code, is what I'm saying. It's WTFPL licensed, so you can use it, but if you have even a modicum of Python knowledge, you'd be better off writing your own.

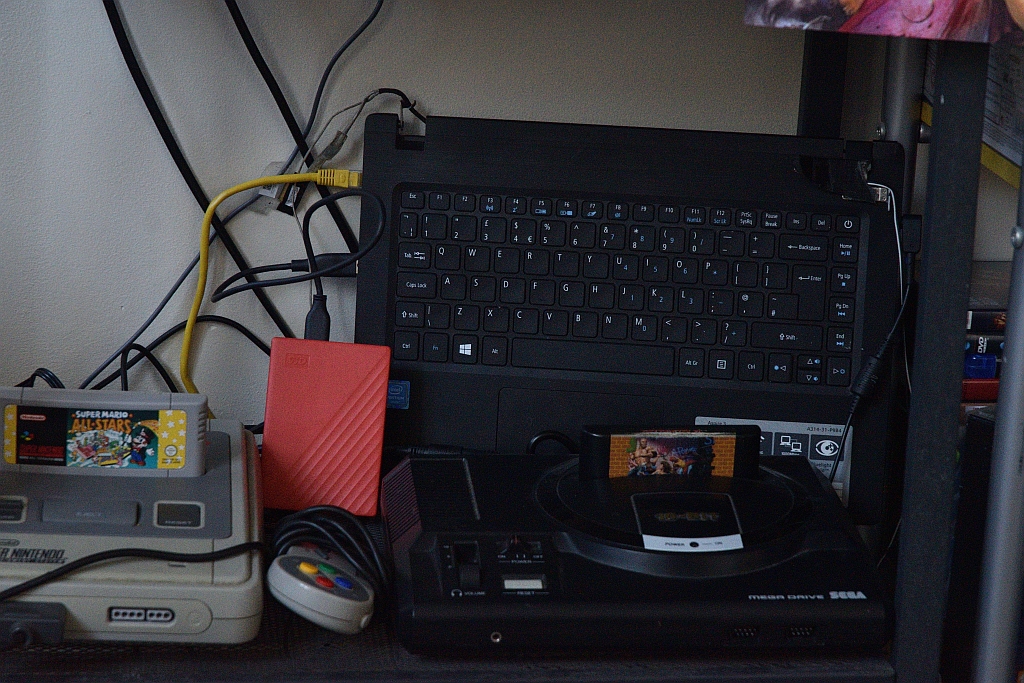

I ran the code on my desktop for a few hours as a final sanity check, then in a 20 minute window between posts, switched it off, transferred everything to a Raspberry Pi and ran it from there. After a week, I moved it over to my home server, an old laptop with a broken screen. I ripped the screen off and shoved it next to my TV setup as that was in handy reach of a router and power. It's been there ever since. This has worked out tremendously well and it'll probably stay in this configuration for the rest of its lifespan.

here's your server, bro


In the very beginning I wrote some code to follow back anyone that follows it, but it didn't really work right and diagnosing it would have taken time away from the parts that matter. If you suffered any weird notifications from the bot in the early days, my bad.

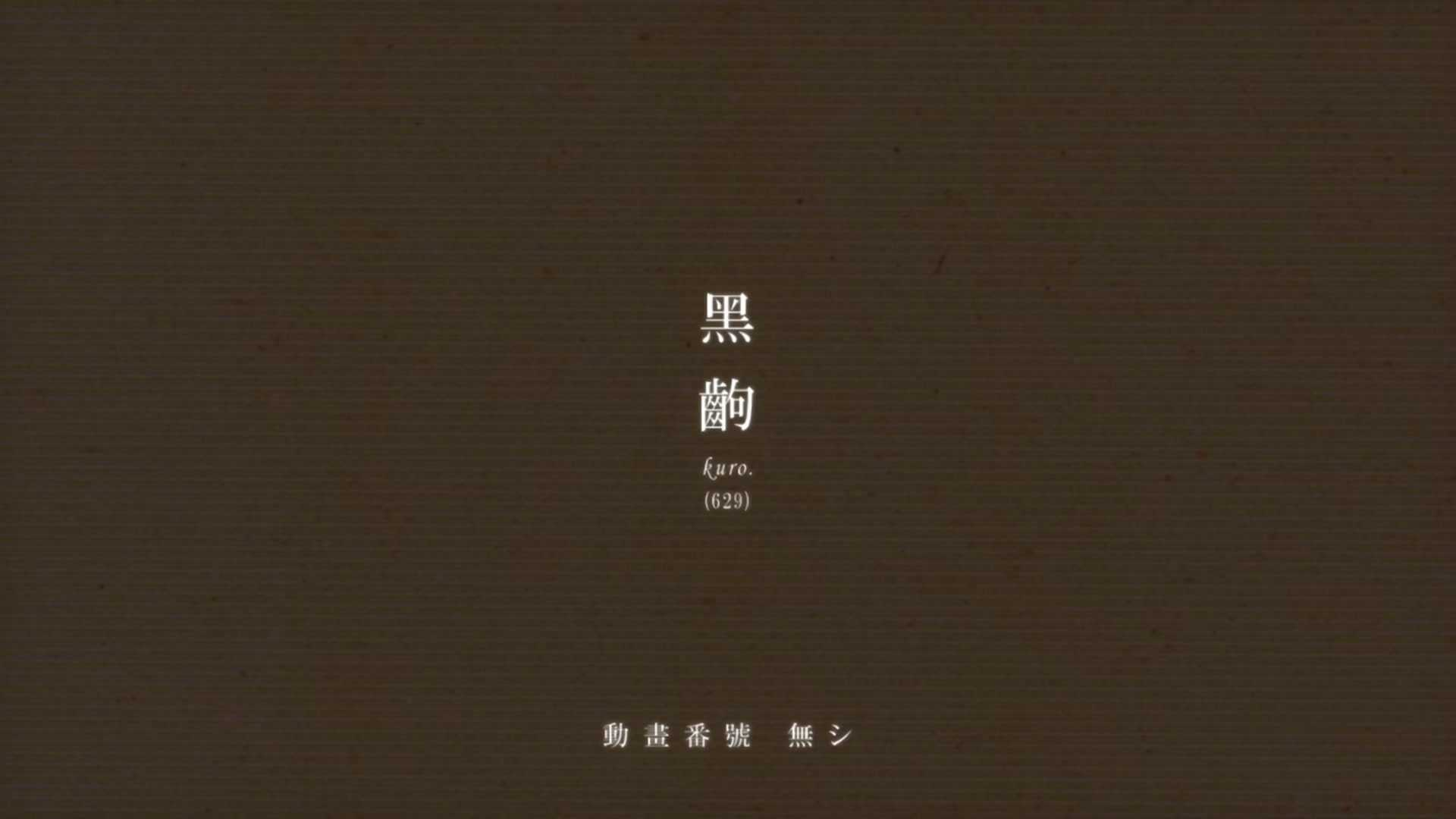

Step 3 : Regret

Not long after it started, I noticed the results looked a bit ... shit, sometimes. The output had too many black frames.

Not this kind. But there are a lot of these, too


Pans would start with the focal character uncomfortably out of frame, tilt shots would have a sliver of shoe or a fragment of head, characters caught mid blink, bad looking tweens, transitions stuck halfway. It looked baaaad.

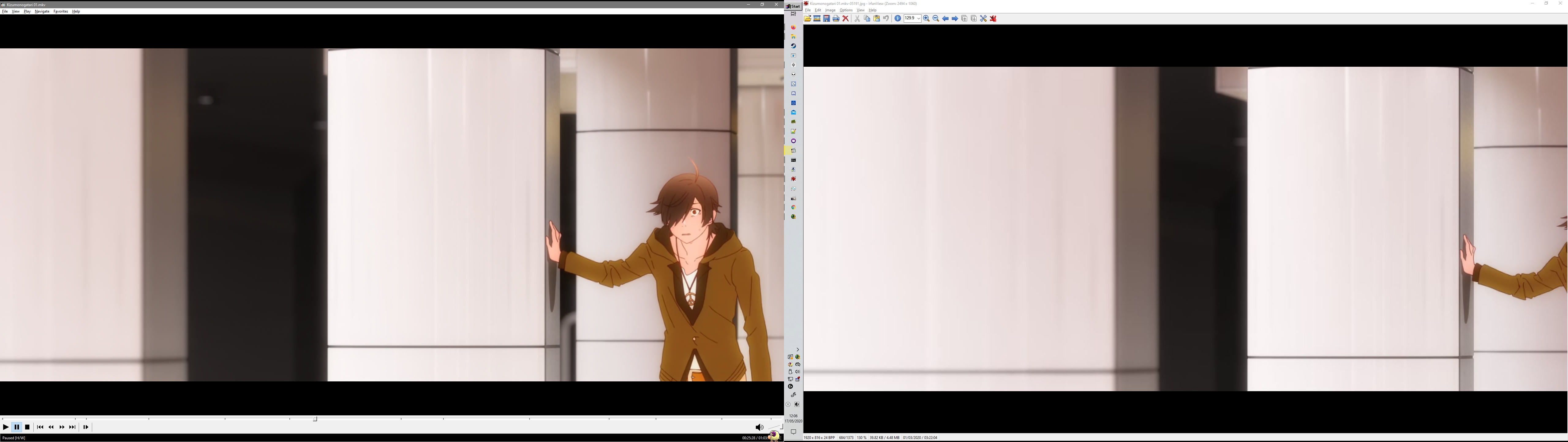

I anticipated the results wouldn't be amazing, but this sucked. I-frames were not the zero-effort panacea I craved, but they were a great starting point. Some shots worked, and the duds are usually only a handful of frames away from being ideal. Using a file rename tool, I spaced out the frame numbers, so there was some breathing room in the sequence to insert new frames.
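I used an off-the-shelf rename tool for this, but the idea is simple enough to sketch in Python. This is an illustration, not what I ran: `space_out_frames`, the step of 10 and the switch to six digits are all choices made for the example. Each frame number is multiplied by the step, so "-00003.jpg" becomes "-000030.jpg" and there are nine free slots before the next frame.

```python
import re
from pathlib import Path

# Matches untouched ffmpeg output such as "Bakemonogatari 01.mkv-00003.jpg".
FRAME_RE = re.compile(r"^(?P<stem>.+)-(?P<num>\d{5})\.jpg$")

def space_out_frames(folder, step=10):
    """Renumber five-digit frames with gaps, returning the new file names."""
    renamed = []
    # sorted() builds the full list first, so renaming as we go is safe.
    for path in sorted(Path(folder).iterdir()):
        match = FRAME_RE.match(path.name)
        if match is None:
            continue  # not an untouched frame; leave it alone
        new_number = int(match["num"]) * step
        # Six digits keeps the names sortable and marks the file as done,
        # so running this twice doesn't space the frames out again.
        target = path.with_name(f"{match['stem']}-{new_number:06d}.jpg")
        path.rename(target)
        renamed.append(target.name)
    return renamed
```

A hand-picked replacement can then be saved as, say, "-000035.jpg" and it will sort between the generated frames on either side.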

So I've been sitting down and watching the series. Thanks to the 2020 Covid-19 shutdown, I've had a lot of time on my hands, and as my previous Strike Witches project proves, I seem to enjoy looking at thousands upon thousands of raw frames. With the video and the output folder next to each other, flicking through in step, I swapped frames out as I went. Taking a screenshot for every bad cut and pose, a 25 minute episode takes about an hour to fix up.

On the right is a frame as picked by the script, on the left is my correction. Much better.

spot the e-sheep


One huge upside of this approach is that the original generated files still exist, so should I ever fall behind or lose interest, it can go back to using those and continue working, albeit at reduced quality.

Step 4 : Relief

It worked! As of this writing, it's been running for almost 2 months. Some scenes drag on for too long, some not long enough. That's not my fault, I didn't direct the damn thing. I've only made a few noticeable goofs in the data set so far, mostly getting confused and mixing frames up in long sequences. Let's hope it doesn't get copyright banhammered anytime soon, hah.

Stuff

- twitter image uploader script. Don't look too closely at it, it's a bit weird.
- the bot itself. Just like the show itself, it may be NSFW on occasion.

All code featured on this page is under the WTFPL licence. You don't want to use it for your own purposes, but you CAN.